Semantic image segmentation can be thought of as building a classification model for every pixel in an image, where each pixel is assigned one label from a fixed set of classes. Its use in machine learning models is growing.
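To make the "classification per pixel" idea concrete, here is a minimal sketch in plain Python. It assumes a hypothetical model output in which every pixel carries one score per class; the function name, the class names, and the scores are made up for illustration and do not come from any particular library.

```python
def label_map_from_scores(scores):
    """Pick the highest-scoring class for every pixel.

    scores[y][x] is a list of per-class scores for one pixel (hypothetical
    model output); the returned map holds one class index per pixel.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


# A 2x2 image with three illustrative classes: 0 = background, 1 = plate, 2 = food.
scores = [
    [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1]],
    [[0.1, 0.6, 0.3], [0.1, 0.2, 0.7]],
]
label_map = label_map_from_scores(scores)
```

The result is a grid of the same height and width as the image, with a single class index in each cell. That grid is what pixel-level annotation has to provide as ground truth.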

Annotating with pixel-level precision is a challenging task, which is why only a handful of image annotation and data labeling companies include it in their offering. It is also typically the most expensive service on the market, and pricing is often ambiguous: many providers charge per image rather than using a standard per-object pricing model. While object detection with bounding boxes is still the predominant annotation technique in computer vision, segmentation is growing because it allows precise image analysis and can be used for further image modification.

One popular use case was the Kaggle Carvana Image Masking Challenge. The idea behind this classic challenge was to mask out the backgrounds and noisy parts of photos that users upload to a car-sale website. Such photo enhancement strategies can be a game changer for the photography and web industries.

So how do you make image segmentation accurate at the pixel level and ensure that its foundation, polygonal annotation, translates into full masks with no pixels lost?

Case study: semantic segmentation for a photography company

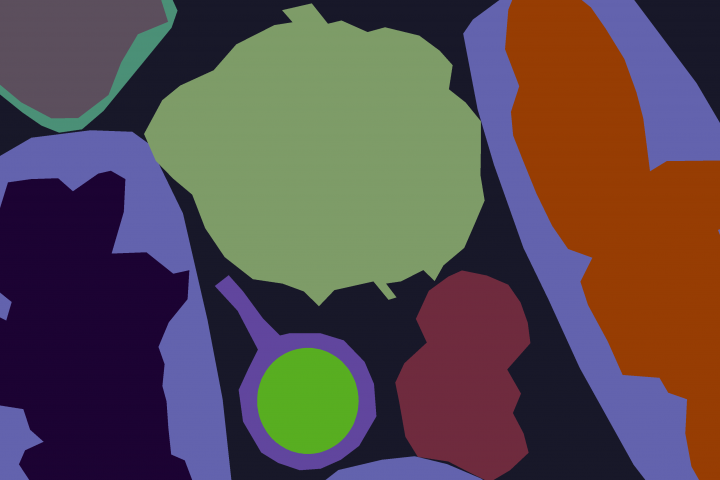

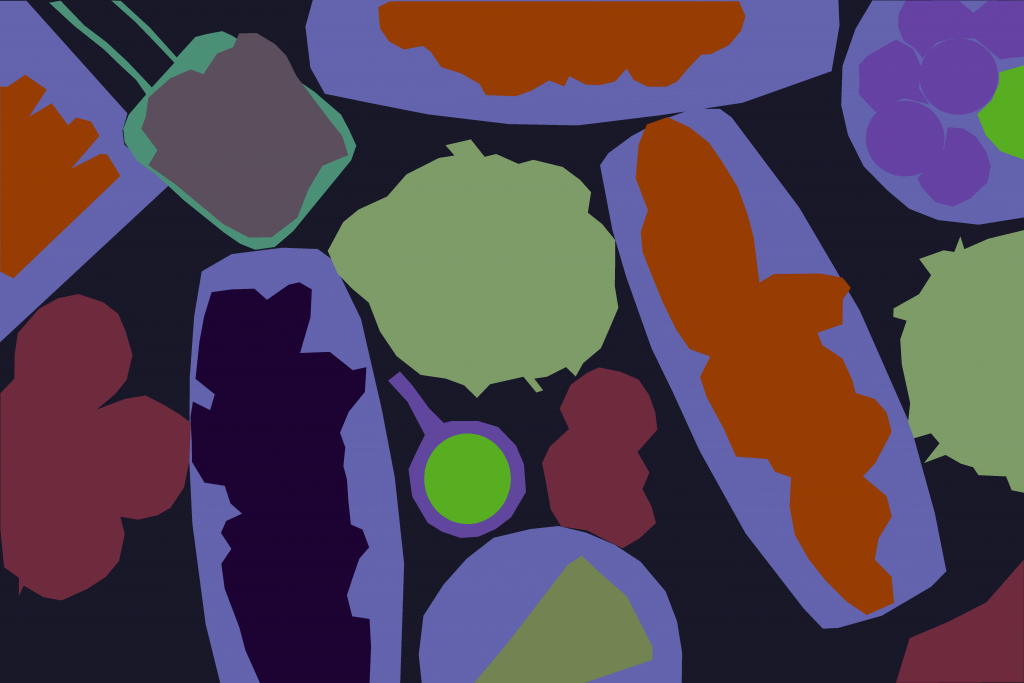

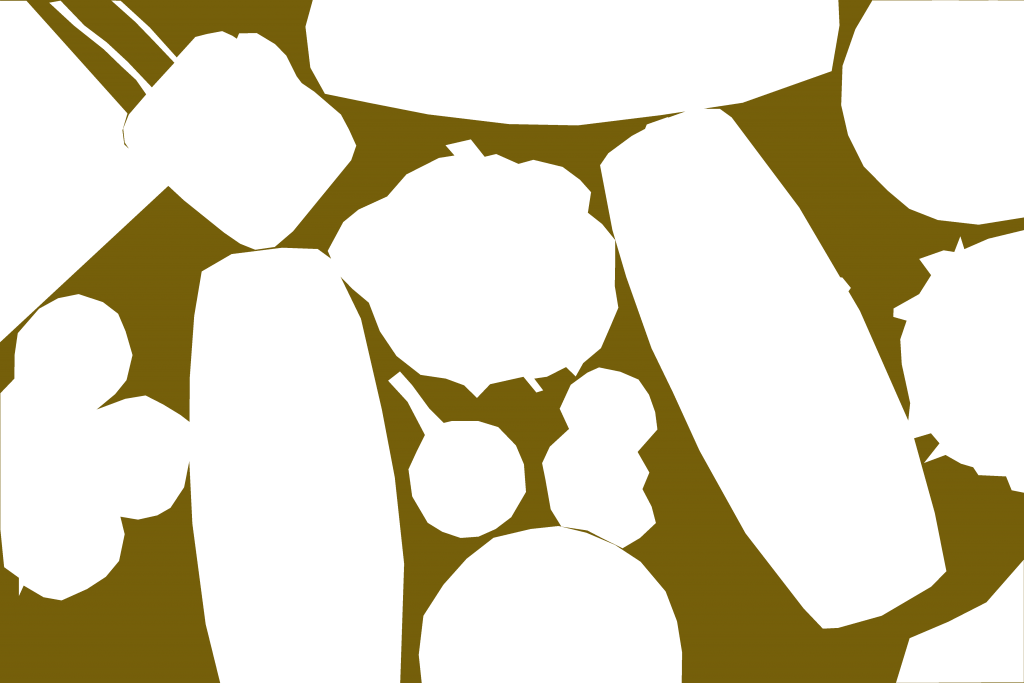

We prepared a data set for a company that needed to retouch thousands of photographs shot as dining advertisements at restaurants. This particular segmentation task was challenging because it required defining layers. In most aerial images the classes (buildings, vegetation, roads) do not overlap, but in photography segmentation, or with any street-view camera, objects do overlap, and indexing tools become useful. Each class gets not only an assigned colored mask but also an index. The background automatically inherits the lowest index and does not need to be annotated, and the remaining classes are assigned *1, *2, *3 in the required order. In food photography, our choices were:

Background: the index 0 class.

Plates, packaging, and bowls: the index 1 class.

Food: the index 2 class.

By using indexing instead of classic solutions such as calculating the coordinates of the objects, we were able to handle cases where the objects in the foreground are larger than the rest. Indexing allows higher-index classes to be subtracted from lower-index ones, which ensures there are no double lines or lost pixels.
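The subtraction can be illustrated with a small sketch in plain Python. It is not the annotation tool's actual implementation: the function names, the `(index, mask)` layer format, and the toy 4x4 masks are assumptions made for the example. Binary masks are merged in ascending index order, so a higher index overwrites a lower one wherever they overlap, and every pixel ends up with exactly one label.

```python
def compose_label_map(layers, height, width):
    """Merge binary class masks into a single label map.

    layers is a list of (index, mask) pairs, where mask is a height x width
    grid of 0/1 values. Where masks overlap, the highest index wins;
    pixels covered by no mask keep the background index 0.
    """
    label_map = [[0] * width for _ in range(height)]
    # Ascending order, so higher-index layers overwrite lower ones.
    for index, mask in sorted(layers, key=lambda layer: layer[0]):
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    label_map[y][x] = index
    return label_map


def exclusive_mask(label_map, index):
    """Binary mask of one class with all higher-index classes subtracted."""
    return [[1 if value == index else 0 for value in row] for row in label_map]


# Toy example: a plate (index 1) with food (index 2) sitting on it.
plate = [
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
food = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
label_map = compose_label_map([(1, plate), (2, food)], 4, 4)
plate_only = exclusive_mask(label_map, 1)
```

Here `plate_only` has a hole exactly where the food sits. Because both masks are read from the same label map, the two classes share one boundary, with no gap and no doubled edge between them.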

Full image with classes and masks: background, sauce, bowl, plate, dessert, basket

First, we draw polygons for the respective lower-level classes, thereby defining the background mask.

Packaging, containers, and plates are therefore assigned index *1 (following the background).

The same *1 index goes to the bowl class, so that the model can be trained by subtracting the labeled sauce inside it.

Lastly, the food placed on the plate is assigned index *2, and the process may go even further.
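The drawing order described in these steps can be sketched as a simple rasterizer in plain Python. This is an illustration under stated assumptions, not the tool used in the case study: the `(index, polygon)` annotation format, the function names, and the coordinates are hypothetical, and the point-in-polygon test is standard even-odd ray casting sampled at pixel centres.

```python
def point_in_polygon(px, py, polygon):
    """Even-odd ray casting test for a point against a list of (x, y) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray cast to the right of (px, py) cross this edge?
        if (y1 > py) != (y2 > py):
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def rasterize(annotations, height, width):
    """Paint polygons in ascending index order onto a background of 0."""
    label_map = [[0] * width for _ in range(height)]
    for index, polygon in sorted(annotations, key=lambda a: a[0]):
        for y in range(height):
            for x in range(width):
                # Sample each pixel at its centre.
                if point_in_polygon(x + 0.5, y + 0.5, polygon):
                    label_map[y][x] = index
    return label_map


# Hypothetical annotations: (index, polygon vertices in pixel coordinates).
annotations = [
    (2, [(2, 2), (4, 2), (4, 4), (2, 4)]),  # food, drawn on top of the plate
    (1, [(1, 1), (5, 1), (5, 5), (1, 5)]),  # plate
]
label_map = rasterize(annotations, 6, 6)
```

The background is never drawn: it is whatever remains at index 0 after the polygons are painted. Sorting by index means the annotator can draw the polygons in any order and the food still ends up on top of the plate.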