Introducing COCO Format Annotation for Semantic Segmentation and Object Detection Deep Learning

When shape matters. The COCO (Common Objects in Context) library started with a handful of enthusiasts but has since grown into a substantial image dataset.

Importantly, over years of publications and open-source development on GitHub, it has gained supporters ranging from large companies such as Google and Facebook to startups that focus on segmentation and polygonal annotation for their products, such as Mighty AI.

Why COCO? Interoperability, and the ability to improve results using millions of open-source images, clearly matter when training your machine learning model. Here are a few reasons why we are also releasing our annotation results in COCO format:

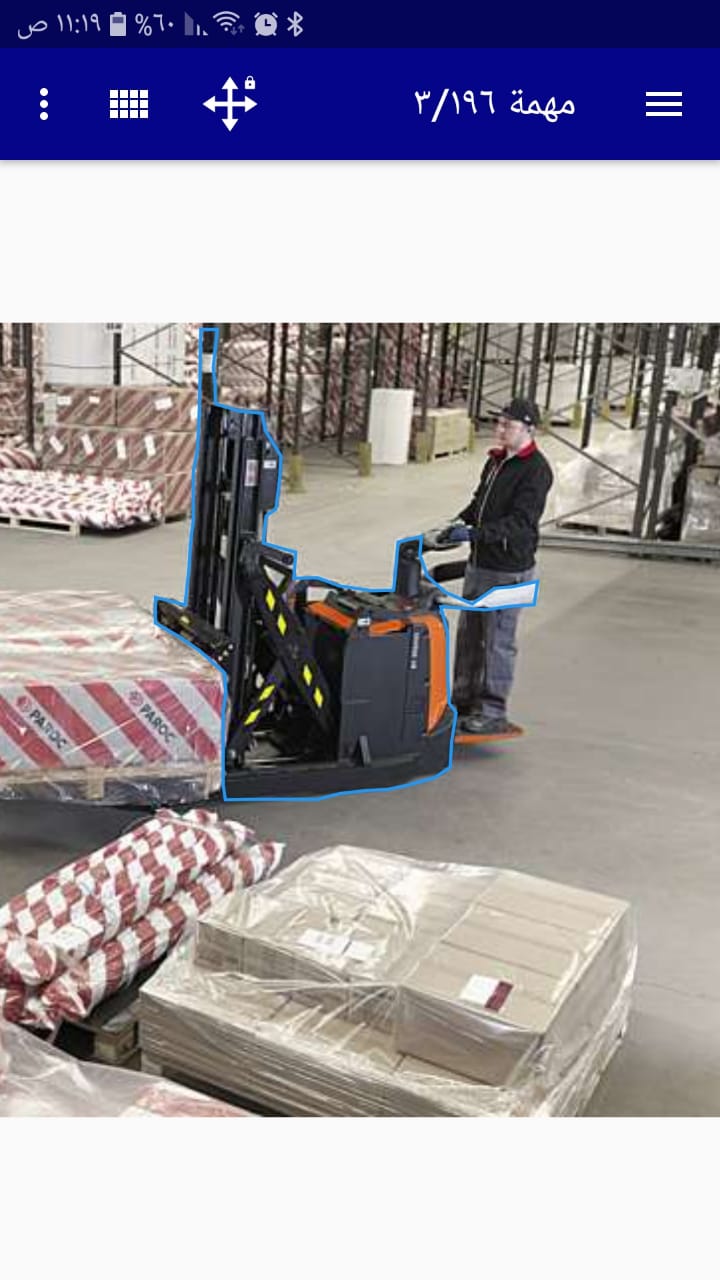

Non-standard objects, where localizing the object with only a rectangle (i.e. the bounding box format) would lead to a poorly performing machine learning model. Imagine large material-handling machines, manufacturing facilities, wires, or other complex scenes.

When an object is non-compact and split into several pieces, the segments can still be enclosed in a single bounding box, while the shape itself is annotated as polygons.

Imagine training a machine learning model with only bounding boxes in this example. In some cases, noise in the images makes this very difficult.

Or nearly impossible, when the object's outer boundaries span the entire cropped image, which is common in many street-view camera use cases.
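One way to see why a box alone says so little about such objects is to measure how much of the box the object actually covers. The sketch below assumes COCO's flat polygon layout (`[x1, y1, x2, y2, ...]`) and `[x, y, width, height]` boxes; `polygon_area`, `box_fill_ratio` and the coordinates are hypothetical illustrations, not part of any COCO tooling. It uses the shoelace formula, which only approximates COCO's own `area` field (that field is derived from the rasterized mask).

```python
from typing import List


def polygon_area(poly: List[float]) -> float:
    """Area of one flat polygon [x1, y1, x2, y2, ...] via the shoelace formula."""
    xs, ys = poly[0::2], poly[1::2]
    n = len(xs)
    twice_area = 0.0
    for i in range(n):
        j = (i + 1) % n  # next vertex, wrapping around to close the polygon
        twice_area += xs[i] * ys[j] - xs[j] * ys[i]
    return abs(twice_area) / 2.0


def box_fill_ratio(segmentation: List[List[float]], bbox: List[float]) -> float:
    """Fraction of an [x, y, width, height] box covered by the polygons.

    Assumes the polygons do not overlap one another.
    """
    object_area = sum(polygon_area(p) for p in segmentation)
    return object_area / (bbox[2] * bbox[3])


# Hypothetical thin diagonal object (e.g. a cable) crossing a 100x100 crop.
strip = [0, 0, 10, 0, 100, 90, 100, 100, 90, 100, 0, 10]
print(box_fill_ratio([strip], [0, 0, 100, 100]))  # 0.19
```

Here the bounding box is the whole crop, yet only 19% of it is the object; a box-only label would teach the model that the remaining 81% of background belongs to the object as well.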

Significant cost savings for machine learning engineers who continuously improve their models: each instance can be annotated at the price of a polygon only.
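Because a COCO annotation stores the polygon `segmentation` alongside the `bbox`, the box does not need to be drawn separately. As a minimal sketch, assuming COCO's flat polygon layout, the hypothetical helper `bbox_from_polygons` below derives the box from the polygons, including the case of a non-compact object split into two pieces. The coordinates are made up for illustration.

```python
from typing import List


def bbox_from_polygons(segmentation: List[List[float]]) -> List[float]:
    """Return an [x, y, width, height] box enclosing every polygon.

    Each polygon is a flat list [x1, y1, x2, y2, ...]; an object split into
    pieces simply has more than one polygon in the list.
    """
    xs = [p[i] for p in segmentation for i in range(0, len(p), 2)]
    ys = [p[i] for p in segmentation for i in range(1, len(p), 2)]
    x_min, y_min = min(xs), min(ys)
    return [x_min, y_min, max(xs) - x_min, max(ys) - y_min]


# Hypothetical object visible as two separate pieces (e.g. a partly occluded wire).
pieces = [
    [10.0, 10.0, 40.0, 10.0, 40.0, 20.0, 10.0, 20.0],  # left piece
    [70.0, 30.0, 90.0, 30.0, 90.0, 60.0, 70.0, 60.0],  # right piece
]
annotation = {"segmentation": pieces, "bbox": bbox_from_polygons(pieces), "iscrowd": 0}
print(annotation["bbox"])  # [10.0, 10.0, 80.0, 50.0]
```

Both pieces end up in one annotation with one enclosing box, so a single polygon pass serves segmentation and detection training alike.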

Open-source community. Use the ever-growing COCO library to improve the results of your own machine learning model.
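For readers who have not worked with the format yet, the sketch below builds a minimal COCO-style annotation file in Python using only the standard library. The top-level `images`, `categories` and `annotations` lists and the per-annotation fields (`segmentation` as flat polygon lists, `bbox` as `[x, y, width, height]`, `area`, `iscrowd`) follow the public COCO layout. The file name, category and coordinates are hypothetical placeholders, not data from our release.

```python
import json


def make_coco_dataset() -> dict:
    """Build a minimal COCO-style annotation dict (illustrative values only)."""
    return {
        "images": [
            # Hypothetical image entry; file name and size are made up.
            {"id": 1, "file_name": "site_001.jpg", "width": 640, "height": 480},
        ],
        "categories": [
            # Hypothetical category for a machine in a manufacturing scene.
            {"id": 1, "name": "crane", "supercategory": "machine"},
        ],
        "annotations": [
            {
                "id": 1,
                "image_id": 1,     # links the annotation to the image above
                "category_id": 1,  # links the annotation to the category above
                # One polygon as a flat list [x1, y1, x2, y2, ...].
                "segmentation": [[100.0, 100.0, 200.0, 100.0, 200.0, 150.0, 100.0, 150.0]],
                # Bounding box as [x, y, width, height].
                "bbox": [100.0, 100.0, 100.0, 50.0],
                "area": 5000.0,  # 100 x 50 pixels for this rectangle-shaped polygon
                "iscrowd": 0,    # 0 = individual instance annotated with polygons
            }
        ],
    }


if __name__ == "__main__":
    # Serialize to JSON, the on-disk format COCO annotation files use.
    print(json.dumps(make_coco_dataset(), indent=2))
```

Keeping images, categories and annotations in separate lists linked by IDs lets one image carry many annotations without repeating image metadata.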